Security Operations Centers (SOCs) often serve as the backbone of an organization’s cybersecurity. Security analysts continuously collaborate to detect, investigate, and respond to cyber threats. Yet, according to a 2024 Gartner report, many SOCs struggle with data overload[i], a lack of contextual insight into security alerts, alert fatigue, and a persistent shortage of skilled cybersecurity professionals[ii].

However, SOC operators might soon get assistance from an emerging technology: AI agents leveraging Agentic AI. These agents can autonomously detect, respond to, and mitigate security and fraud threats in near real-time[iii], all while being trained on large volumes of data to counter the latest threats[iv]. This advancement could significantly reshape the way SOCs operate, turning static automation into flexible threat management. It may also offer a solution to the increasing shortage of security professionals. Additionally, this development could help smaller businesses improve their security posture without high investment in hiring talented security experts.

At the same time, new forms of technology might also create new vulnerabilities. Agentic AI might increase security risks in several ways. Unlike traditional AI systems that operate within controlled environments, AI agents leveraging Agentic AI interact with various systems and external data sources, expanding the attack surface. Additionally, the introduction of Agentic AI raises new implementation and regulatory challenges that are not yet well defined.

Defining Agentic AI

Since the first wave of Large Language Models (LLMs) reached the public around 2022 and 2023, AI solutions have been advancing rapidly. What began as relatively simple LLMs that could generate text and images quickly evolved into AI-driven agents that can reason, act, and learn autonomously. Definitions of Agentic AI vary considerably across sources. Russell and Norvig (2021) refer to an “Intelligent Agent”, which aligns with the concept of Agentic AI, as “an agent that acts appropriately for its circumstances and its goals, is flexible to changing environments and goals, learns from experience, and makes appropriate choices given its perceptual and computational limitations.”[v]

While formal definitions of Agentic AI may vary, these systems typically exhibit four core capabilities: perceive, reason, act, and learn.[vi]

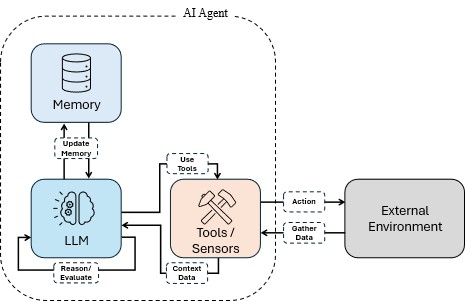

Perception enables the agent to gather and interpret data from various sources (figure 1).

This interpretation puts the data into the proper context and extracts meaningful insights from the environment. The agent can use the tools or sensors at its disposal to collect additional data and enrich its understanding of the situation. It can also draw on its memory of past experiences to better contextualize new information. Reasoning empowers the agent to make informed decisions, understand objectives, and strategically decide how to use available tools to reach its goals. The underlying LLM enables the agent to process complex patterns and information and adopt an effective strategy. Action reflects the agent’s ability to carry out tasks using its toolkit, such as through API calls, system integrations, or other interfaces. Finally, learning allows the agent to continually improve by analyzing its actions and incoming data, becoming more accurate and efficient over time.
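The four capabilities can be read as a loop. The following is a minimal sketch, not a reference implementation: the class `ToyAgent`, its method names, the tool names, and the sample event are all hypothetical, and a simple keyword rule stands in for the LLM-based reasoning step.

```python
from dataclasses import dataclass, field


@dataclass
class ToyAgent:
    """Hypothetical agent; a keyword rule stands in for LLM reasoning."""
    memory: dict = field(default_factory=dict)  # observed text -> analyst feedback

    def perceive(self, raw_event: str) -> dict:
        # Interpret raw data and enrich it with what memory already holds.
        text = raw_event.strip().lower()
        return {"text": text, "seen_before": self.memory.get(text)}

    def reason(self, observation: dict) -> str:
        # Decide which tool to use; a real agent would consult an LLM here.
        if observation["seen_before"] == "benign":
            return "ignore"
        if "failed login" in observation["text"]:
            return "lock_account"
        return "escalate"

    def act(self, decision: str) -> str:
        # Carry out the decision; real tools would be API calls or integrations.
        tools = {
            "ignore": lambda: "no action taken",
            "lock_account": lambda: "account locked",
            "escalate": lambda: "sent to human analyst",
        }
        return tools[decision]()

    def learn(self, observation: dict, feedback: str) -> None:
        # Store feedback so the next perception step can use it.
        self.memory[observation["text"]] = feedback

    def step(self, raw_event: str) -> str:
        return self.act(self.reason(self.perceive(raw_event)))


agent = ToyAgent()
event = "Failed login from 10.0.0.5"  # made-up sample event
first = agent.step(event)
agent.learn(agent.perceive(event), "benign")  # analyst marks it as benign
second = agent.step(event)
print(first, "|", second)
```

In this toy run the same event is handled differently the second time, because the learning step changed what the perception step retrieves from memory.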

From Automation to Agentic AI: The Next Step

Agentic AI didn’t emerge suddenly. It represents the next phase in an ongoing trend toward more advanced automation and AI technologies. To grasp the context, it’s useful to review the origins.

- Early Forms of Automation (1960s–1980s): Early automation relied heavily on scripts and rule-based systems[vii]. These systems were effective but inflexible. They performed exactly as programmed, which made creating and maintaining rules labor-intensive. Examples include batch job scheduling and rule-based email filters.

- Machine Learning (1990s–2010s): From the 1990s through the 2010s, there were significant developments in statistical models and machine learning techniques. Thanks to advances in algorithms and the rise of deep learning[vii], these systems continually improved, adapting to new data and evolving beyond their original programming. This laid the groundwork for even smarter and more autonomous technologies.

- Generative AI (2020s): The early 2020s brought significant advancements in Generative AI[vii]. These so-called GenAI models could produce text, images, or code, making them much more versatile than traditional Machine Learning (ML), yet they were still limited by their training data and prompts.

- Agentic AI (emerging now): The latest trend appears to be developing AI systems capable of reasoning and working independently. While AI has not yet reached a human-like level of intelligence, Agentic AI seems to be the next step toward more autonomous systems that can support human capabilities by taking over repetitive or complex tasks, freeing humans to focus on more critical work. Agentic AI systems combine reasoning, goal-orientation, and tool orchestration. Instead of passively responding to prompts, they are able to decide how to achieve a goal and adapt their strategies as new information arises. Because this technology is relatively new, practices and tooling around it are still maturing.

To better understand the differences between automation, traditional AI, and Agentic AI, let’s look at a simple and practical example: managing emails.

- Automation: Automation enables the user to create a rule that moves every email containing the keyword invoice into a finance folder. This works reliably for that specific condition but cannot adapt to new situations.

- Non-agentic AI system: A non-agentic AI system would typically analyze incoming emails automatically. It scans the content and categorizes each message by urgency, sorting it into folders like urgent, normal, or low priority. However, it still only performs a single predefined task: classifying messages.

- Agentic AI: An AI agent leveraging Agentic AI enables a managed mailbox without the need to handle rules manually. Instead, the agent is expected to reason about the appropriate action for each scenario. For example:

- When an email contains a meeting invitation, the agent checks the calendar, finds an open slot, and suggests a time.

- If a suspicious email is detected, the agent further investigates by reviewing past communications and checking external sources. Once it confirms it is a phishing attempt, it deletes the message.

- The agent can repeat these steps, adjusting its strategy when one approach fails, until it achieves its goal or determines that human intervention is needed.

While automation follows set rules and traditional AI handles specific tasks, Agentic AI operates independently towards goals, managing multiple tools and changing its reasoning as conditions evolve[viii]. In a SOC setting, applying Agentic AI could mean the difference between just processing alerts and actively investigating and solving them.
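The three email approaches above can be contrasted in a short sketch. Everything here is an illustrative assumption: the function names, the email dictionary layout, the candidate time slots, and the blocklist are hypothetical, and keyword checks stand in for a trained classifier and for LLM reasoning.

```python
def rule_based(email: dict) -> str:
    # Automation: one fixed keyword rule, nothing else.
    return "finance" if "invoice" in email["body"].lower() else "inbox"


def classify_urgency(email: dict) -> str:
    # Non-agentic AI: a single predefined task (classification).
    # A keyword check stands in for a trained model.
    body = email["body"].lower()
    return "urgent" if "urgent" in body or "asap" in body else "normal"


def agentic_handle(email: dict, busy_slots: set, known_senders: set,
                   blocklist: set) -> str:
    # Agentic: pick an action per situation and hand over when unsure.
    body = email["body"].lower()
    if "meeting" in body:
        # Check the calendar and propose the first free slot.
        for slot in ("09:00", "11:00", "14:00"):
            if slot not in busy_slots:
                return f"suggest {slot}"
        return "ask human"  # no free slot found
    if email["sender"] not in known_senders:
        # Investigate: past communications first, then an external source.
        if email["sender"] in blocklist:
            return "delete"  # confirmed phishing
        return "ask human"  # evidence inconclusive
    return "inbox"


invite = {"sender": "bob@example.com", "body": "Meeting about the invoice?"}
phish = {"sender": "x@evil.example", "body": "URGENT: reset your password"}
known = {"bob@example.com"}

print(rule_based(invite))
print(classify_urgency(phish))
print(agentic_handle(invite, {"09:00"}, known, set()))
print(agentic_handle(phish, set(), known, {"x@evil.example"}))
```

Note that the rule files the meeting invitation under finance simply because it mentions an invoice, while the agentic handler treats the same message as a scheduling request.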

Different Ways of Configuring Agentic AI

Depending on operational needs and the complexity of the threat environment, AI agents leveraging Agentic AI may be deployed in various configurations. Some examples include a single agent, an all-in-one agent, or even a coordinated network of specialized agents[ix]. The best setup for a SOC depends on its specific goals, its maturity level, and the difficulty of the threats it faces.

Single-Agent Setup

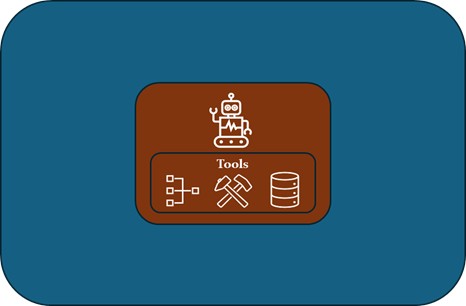

In this setup, a single agent manages its responsibilities independently without assistance from other agents (figure 2).

The agent monitors alerts, conducts analysis, and can initiate actions by itself when necessary. This configuration can be enough for environments with lower alert volumes or well-defined, repetitive tasks that tend to be less complex. It can serve as a starting point for Agentic AI implementation, as it is considerably easier to deploy compared to multiple agents.

Multi-Agent Configuration

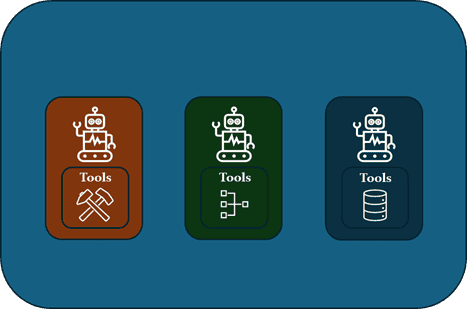

In this configuration, several agents work together as a team (figure 3).

Each agent has a specific role with its own responsibilities. For example, one agent might focus on analyzing alerts, while another handles network-related incidents. When the first agent identifies an alert that needs action, it passes the task to the appropriate specialist agent to complete it. This approach improves efficiency and scalability.
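A minimal sketch of such a handoff is shown below. The class names, the alert fields (`category`, `host`), and the fallback to a human analyst are hypothetical choices made for illustration only.

```python
class NetworkAgent:
    """Hypothetical specialist for network-related incidents."""

    def handle(self, alert: dict) -> str:
        return f"network agent isolated {alert['host']}"


class TriageAgent:
    """Hypothetical first-line agent that analyzes alerts and hands them off."""

    def __init__(self, specialists: dict):
        self.specialists = specialists  # alert category -> specialist agent

    def handle(self, alert: dict) -> str:
        specialist = self.specialists.get(alert["category"])
        if specialist is None:
            # No matching specialist: keep a human in the loop.
            return "escalated to human analyst"
        return specialist.handle(alert)


triage = TriageAgent({"network": NetworkAgent()})
handled = triage.handle({"category": "network", "host": "srv-01"})
unhandled = triage.handle({"category": "identity", "host": "srv-02"})
print(handled)
print(unhandled)
```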

Orchestrated Multi-Agent Setup

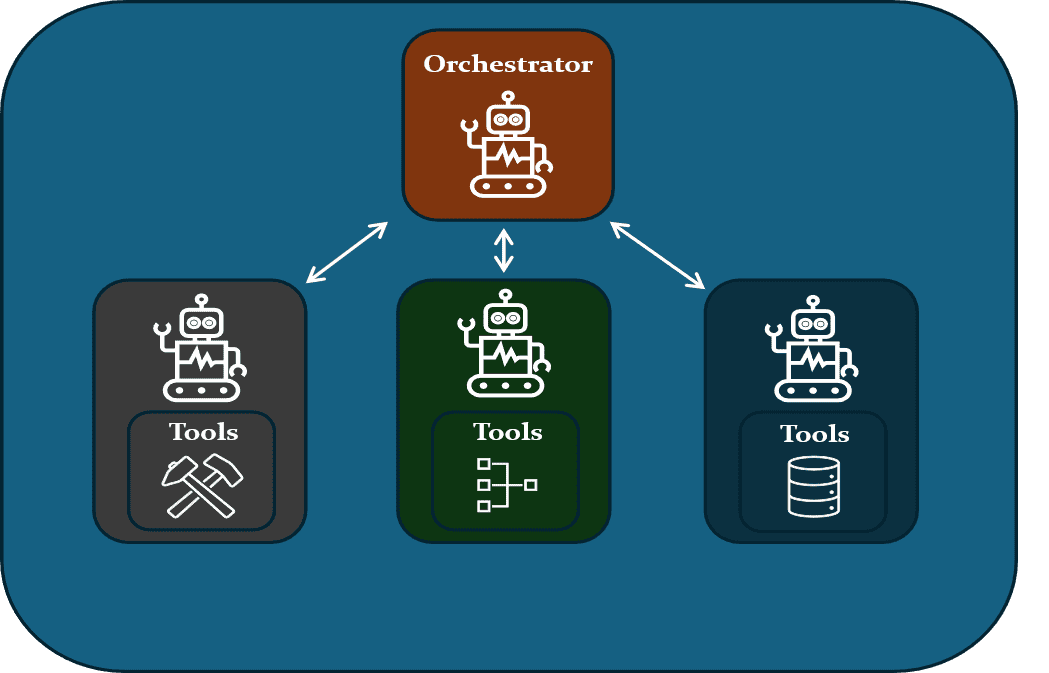

This is the most complex of the configurations mentioned. The team of agents is led by an orchestrator agent that acts as a coordinator (figure 4).

When an alert or goal is received, the orchestrator develops a response plan and assigns tasks to specialized agents accordingly. It not only distributes work but also determines the best way to solve the problem. This approach closely resembles how a SOC team lead coordinates efforts among analysts. Although this setup is more challenging to implement, it is more effective for complex and dynamic threat environments. The added complexity comes from coordinating multiple specialized agents under one orchestrator; in return, tasks can be handled efficiently and in parallel. This approach improves scalability, accuracy, and resilience compared to a single-agent system that manages all tasks by itself.
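The plan-then-delegate pattern can be sketched as follows. This is an illustration under stated assumptions: the specialist functions, the `plan` logic, and the alert fields are hypothetical, and a simple rule replaces the LLM-based planning a real orchestrator would use. Parallel execution uses `ThreadPoolExecutor` from the Python standard library.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical specialist agents, reduced to plain functions.
def enrich(alert: dict) -> str:
    return f"enriched {alert['id']}"


def check_network(alert: dict) -> str:
    return f"checked network for {alert['id']}"


def check_identity(alert: dict) -> str:
    return f"checked identity for {alert['id']}"


SPECIALISTS = {
    "enrich": enrich,
    "network": check_network,
    "identity": check_identity,
}


def plan(alert: dict) -> list:
    # Planning step: decide which specialists this alert needs.
    tasks = ["enrich"]
    if alert["type"] == "lateral_movement":
        tasks += ["network", "identity"]
    return tasks


def orchestrate(alert: dict) -> list:
    tasks = plan(alert)
    # Run the assigned specialists in parallel; map() keeps the task order.
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        return list(pool.map(lambda name: SPECIALISTS[name](alert), tasks))


results = orchestrate({"id": "A-1", "type": "lateral_movement"})
print(results)
```

The orchestrator here both chooses the tasks and distributes them, which mirrors the distinction made above between merely routing work and deciding how to solve the problem.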

Advantages of Agentic AI within a SOC setting

Enhanced efficiency

Agentic AI improves workflow efficiency by automating tasks such as alert triage and incident response, with uses in cybersecurity for autonomous threat detection and response[iv]. This efficiency could reduce costs associated with system deployment. Unlike simple automation, Agentic AI can reason independently, interpret what it observes, and orchestrate a variety of tools to achieve its goals.

More focus on important alerts

SOC analysts often deal with a multitude of alerts, which can lead to alert fatigue. Agentic AI tools can reduce this burden by handling lower-priority alerts, allowing human SOC analysts to concentrate on the most critical threats. This division of responsibilities ensures that important incidents get the attention and expertise they need.
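This division of responsibilities can be sketched as a simple routing step. The severity scale, the threshold of 7, and the field names are assumptions chosen for illustration; a real SOC would define its own criteria.

```python
def route_alerts(alerts: list, analyst_threshold: int = 7) -> tuple:
    """Split alerts into an agent queue and a human analyst queue.

    The numeric severity scale and the threshold are hypothetical.
    """
    agent_queue, analyst_queue = [], []
    for alert in alerts:
        if alert["severity"] >= analyst_threshold:
            analyst_queue.append(alert["id"])  # critical: human attention
        else:
            agent_queue.append(alert["id"])  # lower priority: agent handles it
    return agent_queue, analyst_queue


sample = [
    {"id": "A-1", "severity": 3},
    {"id": "A-2", "severity": 9},
    {"id": "A-3", "severity": 7},
]
for_agent, for_analyst = route_alerts(sample)
print(for_agent, for_analyst)
```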

Continuous detection

The ability to operate 24/7 is essential for a SOC. While human intervention remains necessary for the most critical alerts, an Agentic AI agent may take over during times of low staffing, ensuring continuous monitoring and response. This not only improves the SOC’s efficiency but also minimizes the chance of missing important alerts outside normal hours.

Risks of implementing Agentic AI within a SOC

Increased attack surface

AI systems are not invulnerable to attacks[x]. In fact, they are part of an organization’s attack surface as well. Since these agents have a range of tools at their disposal, it is important to ensure that no one can manipulate them. Poor security practices could lead to unauthorized access, data leaks, and other vulnerabilities. Poor integration or system flaws have caused sensitive data leaks, and manipulation by malicious actors or coding errors can cause AI agents leveraging Agentic AI to misbehave, resulting in disruptions or losses[xi].

High initial implementation costs

Implementing Agentic AI systems in a SOC poses a significant challenge for most companies due to the lack of in-house expertise. This includes the costs of selecting and implementing the right framework and ensuring secure, reliable API integrations, both of which require specialized expertise and careful alignment with existing security policies. The difficulty of integrating Agentic AI into existing systems and workflows is substantial. Organizations often have legacy systems that are incompatible with the latest AI technologies, which can lead to costly modifications to ensure the AI operates effectively.

The initial costs of implementing Agentic AI systems can be high, reaching tens of thousands of euros, which could act as a barrier for many organizations. Small and medium-sized enterprises (SMEs) with limited budgets will feel this obstacle in particular. These costs include purchasing the technology, integrating it into existing systems, training staff, and covering expenses for updates and security. Such costs might deter adoption, especially if the return on investment (ROI) is uncertain or takes a long time to materialize. Additionally, the need for specialized hardware, software, and skilled personnel to operate AI systems adds to the financial burden.

Regulatory and Compliance Challenges

AI is advancing rapidly and presents complex regulatory and compliance challenges. Governments and agencies are quickly establishing frameworks to ensure these technologies are used responsibly as AI systems increasingly influence decisions related to public safety, privacy, and fairness. This requires adapting to a shifting regulatory landscape that varies significantly across regions and industries. To avoid fines and protect their reputation, organizations need substantial resources and ongoing oversight.

Conclusion

The use of Agentic AI could be a major step forward for SOCs. Its blend of perception, reasoning, action, and learning turns static automation into flexible threat management. With perception and reasoning, agents can interpret complex data and make informed decisions, while their ability to act and learn enables them to respond autonomously and continually improve over time. This increases efficiency, enables continuous detection, and reduces alert fatigue. While all that sounds promising, these benefits also come with challenges like a larger attack surface, high initial costs, and regulatory uncertainties.

Agentic AI could be the next phase in automation and significantly transform how SOCs operate. Like any new technology, it needs time to develop, so early adopters will likely start experimenting with integrating it into their environments. As it progresses, a smoother integration along with established safeguards and standards can be expected.

Security teams must carefully weigh the risks and benefits of Agentic AI, ensuring it is implemented cautiously with strong protection. When used properly, Agentic AI could be more than just a tool; it could become a vital ally in safeguarding our digital future. Organizations should perceive, reason, act and learn when implementing Agentic AI in a SOC: start small, gradually expand, test thoroughly, and establish clear governance and security policies before fully deploying Agentic AI within their SOCs.

References

[i] Nunez, J. and Davies, A. (2024) Hype cycle for Security Operations, 2024, gartner.com. Available at: https://www.gartner.com/en/documents/5622491.

[ii] Krantz, T. and Jonker, A. (2025) What is alert fatigue?, IBM. Available at: https://www.ibm.com/think/topics/alert-fatigue.

[iii] Jakkal, V. (2025) Microsoft Sentinel: The Security Platform for the agentic era, Microsoft Security Blog. Available at: https://www.microsoft.com/en-us/security/blog/2025/09/30/empowering-defenders-in-the-era-of-agentic-ai-with-microsoft-sentinel/.

[iv] Kshetri, N. (2025) ‘Transforming cybersecurity with agentic AI to combat emerging cyber threats’, Telecommunications Policy, 49(6), p. 102976. doi:10.1016/j.telpol.2025.102976.

[v] Russell, S., & Norvig, P. (2021). Artificial Intelligence: A Modern Approach, Global Edition.

[vi] Pounds, E. (2025) What is Agentic AI?, NVIDIA Blog. Available at: https://blogs.nvidia.com/blog/what-is-agentic-ai/.

[vii] Olabiyi, Winner & Akinyele, Docas & Joel, Emmanuel. (2025). The Evolution of AI: From Rule-Based Systems to Data-Driven Intelligence.

[viii] Weng, L. (2023) LLM Powered Autonomous Agents, Lil’Log. Available at: https://lilianweng.github.io/posts/2023-06-23-agent/.

[ix] Sentinel (2025) Agentic AI: Beyond the buzzword – what IT leaders need to know | sentinel, sentinel.com. Available at: https://www.sentinel.com/resources/blog/sentinel-blog/2025/03/05/agentic-ai–beyond-the-buzzword—what-it-leaders-need-to-know.

[x] OWASP (ed.) (2025) Agentic AI – threats and mitigations, OWASP Gen AI Security Project. Available at: https://genai.owasp.org/resource/agentic-ai-threats-and-mitigations/.

[xi] Autoriteit Persoonsgegevens (2024) Caution: Use of AI chatbot may lead to data breaches, Autoriteit Persoonsgegevens. Available at: https://www.autoriteitpersoonsgegevens.nl/en/current/caution-use-of-ai-chatbot-may-lead-to-data-breaches.

Disclaimer

The authors alone are responsible for the views expressed in this article and they do not necessarily represent the views, decisions or policies of the ISACA NL Chapter. The views expressed herein can in no way be taken to reflect the official opinion of the board of ISACA NL Chapter. All reasonable precautions have been taken by the authors to verify the information contained in this publication. However, the published material is being distributed without warranty of any kind, either expressed or implied. The responsibility for the interpretation and use of the material lies with the reader. In no event shall the authors or the board of ISACA NL Chapter be liable for damages arising from its use.