Introduction

Apple, Microsoft, and Google are ushering in an era of smartphones and computers powered by artificial intelligence (AI), designed to automate tasks such as photo editing and sending birthday greetings (B.X. Chen, 2024). To enable these features, however, they require access to more user data. In this new approach, Windows computers will frequently take screenshots of user activities, iPhones will compile information from various apps, and Android phones will listen to calls in real time to detect scams. This raises the question: are you willing to share this level of personal information? The ongoing AI boom is gradually reaching more and more applications, which in turn raises privacy concerns about the vast amounts of data required to train these models. One proposed solution is to decentralize learning by letting each device train a model locally on its own data without sharing that data. These local models are then aggregated into a new global model. This privacy-friendly framework, called Federated Learning (FL; B. McMahan et al., 2017), was introduced to address this problem. While FL is very useful for a future in which AI models are trained in a more privacy-friendly manner, it does not guarantee security against attacks. Based on the work of A. Shokri Kalisa, this article covers how attackers can use backdoor attacks to poison the model produced by FL and what steps can be taken to make it more robust against these attacks.

The rapid boom of AI

Over the past years, artificial intelligence has become a significant part of our daily lives. It has found its way into many of our daily habits and objects, from smartphones to highly advanced industrial processes. Yet many still wonder: what exactly is artificial intelligence, and what does it imply for the future?

AI is a difficult term to define; in fact, many different definitions are in circulation. A commonly seen definition describes AI as a technology that allows machines to mimic a variety of intricate human traits. It enables a computer to perform cognitive functions traditionally associated with human minds, such as perception, reasoning, learning, interacting with the environment, solving problems, and even creative expression.

An AI model is typically created by training it on large amounts of data. Training is essentially an iterative cycle in which the model tries to minimize its prediction errors by adjusting its parameters. In doing so, it learns to recognize and predict patterns that occur across the data. Through this training process, AI systems improve their performance and become able to handle new scenarios over time.
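To make the idea of “minimizing prediction errors by adjusting parameters” concrete, here is a minimal sketch of that cycle for the simplest possible model, a straight line y ≈ w·x + b, trained with plain gradient descent on the mean squared error. The data, learning rate and function names are illustrative assumptions; real AI models have millions or billions of parameters, but the loop has the same shape.

```python
def train(xs, ys, lr=0.05, epochs=500):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # model parameters, initialised to zero
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the prediction error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(w, b, xs, ys):
    """Mean squared prediction error of the line on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data generated by y = 2x + 1; training should recover w ≈ 2 and b ≈ 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train(xs, ys)
print(round(w, 2), round(b, 2), mse(w, b, xs, ys))
```

Each pass nudges the parameters in the direction that lowers the error, which is the “continuous learning cycle” described above.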

Many industries are already integrating AI into their operations to enhance productivity. For instance, Large Language Models (such as BART and GPT) are transforming industries including customer service, content creation and personalized education, allowing them to automate tasks, improve communication, and enhance user experiences. AlphaFold is changing the field of structural biology by predicting the 3D structure of proteins from their amino-acid sequences. Its ability to predict protein structures rapidly and accurately has the potential to accelerate scientific research and address critical challenges in medicine and biotechnology (K. M. Ruff et al., 2021). Moreover, we may one day find ourselves in a future where AI has taken over the responsibility of driving us from A to B in autonomous cars (A. Gupta et al., 2021).

What is the challenge of AI versus data privacy?

Since AI usually requires large amounts of data to train on, gaining access to sufficiently large databases used to be a hurdle for researchers. The arrival of Big Data technologies resolved this problem, and with more data available, AI models have reached capabilities they had never reached before (A. Shokri Kalisa et al., 2024). In the classical way of training AI, participants interface with a central server and upload their data, which is subsequently used for model training. This personal data can consist of images, videos, text or any other form that reveals information about the user. The problem with this setup is that users give away their personal data without any insight into what happens with it. Incidents involving the manipulation and exploitation of user data in recent years, such as the Cambridge Analytica scandal, have highlighted the impact of this problem and raised concerns about privacy and data security (J. Isaak & M. J. Hanna, 2018).

What is Federated Learning?

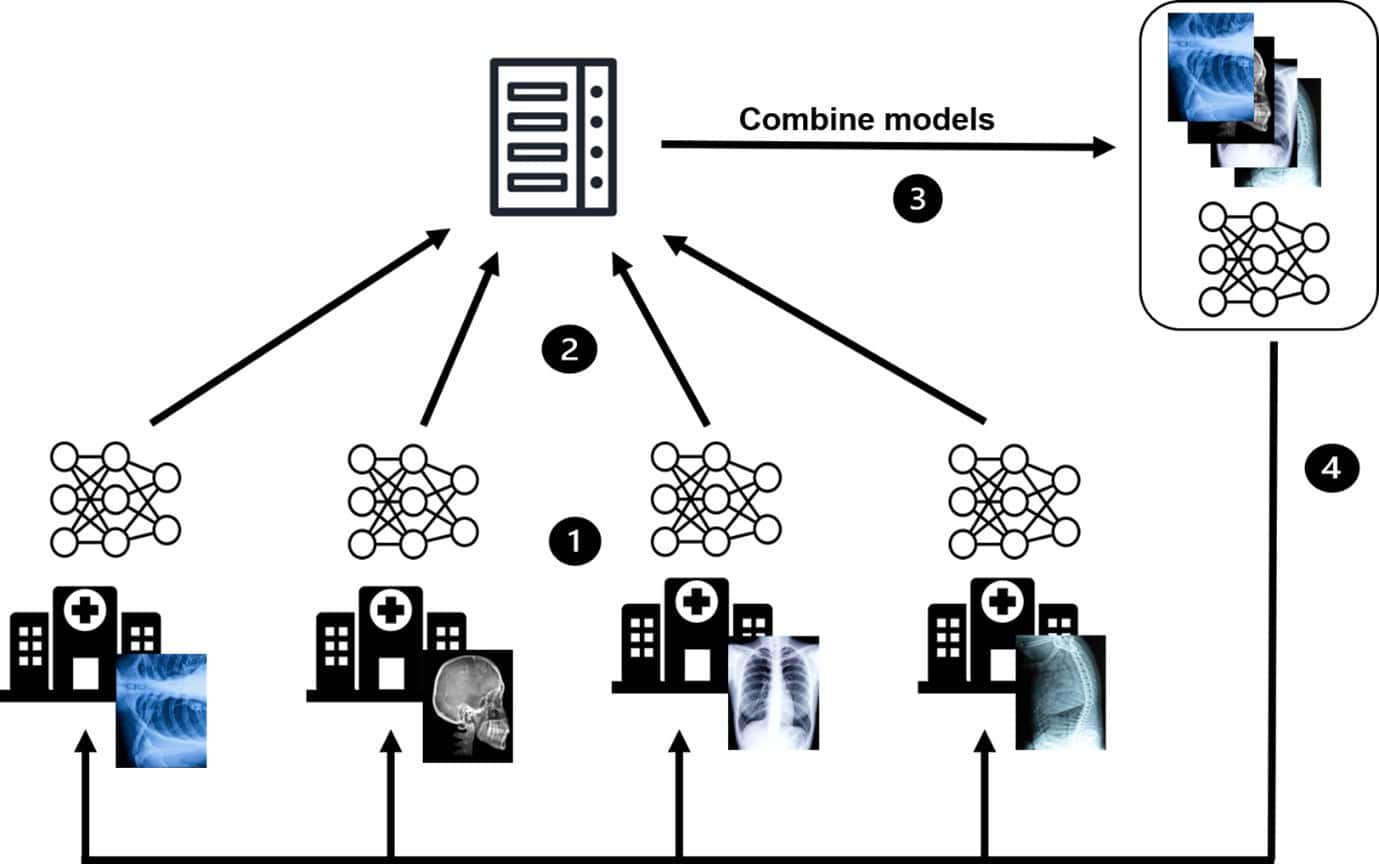

This is where Federated Learning (FL) comes in. FL was designed as a framework for training AI models in a privacy-friendly manner (B. McMahan et al., 2017). It does so by letting each user train a model on their own local data, on their own device (e.g., a mobile phone or laptop). For instance, a user trains a text prediction model on their phone using their own typing data. The central server then selects some of these users to share their local models with it. The server combines the incoming models by averaging them into a centralized global model; averaging balances out the individual contributions of the local models (E. Bagdasaryan et al., 2020). The advantage of this method is that the server only sees the model, which does not directly reveal details about the data it was trained on. Thus, a model can be created while the data stays on the users' devices instead of being collected on a centralized server. This is advantageous when sharing the data is not possible due to security or privacy concerns (M. F. Criado et al., 2022).
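The round described above (select users, train locally, average on the server) can be sketched in a few lines. This is a toy illustration under stated assumptions, not a production FL system: each “model” is a single number w in y ≈ w·x, the five users and their data are made up, and all function names are hypothetical. Weighting each local model by the size of its dataset follows the Federated Averaging (FedAvg) algorithm of McMahan et al. (2017).

```python
import random


def local_update(global_w, data, lr=0.02, epochs=50):
    """Client side: start from the global model and train y ≈ w * x on local data only."""
    w = global_w
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)  # d(MSE)/dw
        w -= lr * grad
    return w


def federated_average(client_ws, client_sizes):
    """Server side: average the client models, weighted by local dataset size."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_ws, client_sizes)) / total


def federated_round(global_w, clients, num_selected, rng):
    """One FL round: select users, let them train locally, average their models."""
    selected = rng.sample(clients, num_selected)  # the server picks a subset of users
    local_ws = [local_update(global_w, data) for data in selected]  # only models leave the devices
    sizes = [len(data) for data in selected]
    return federated_average(local_ws, sizes)


# Hypothetical private datasets of five users; all follow y = 3x.
clients = [
    [(1.0, 3.0)],
    [(1.0, 3.0), (2.0, 6.0)],
    [(2.0, 6.0), (3.0, 9.0)],
    [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)],
    [(3.0, 9.0)],
]

rng = random.Random(0)
global_w = 0.0
for _ in range(10):
    global_w = federated_round(global_w, clients, num_selected=3, rng=rng)
print(round(global_w, 3))
```

Note that `federated_round` never touches the raw `(x, y)` pairs on the server side; it only receives the trained values of `w` and the dataset sizes used for weighting.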

An example of FL in practice is Google’s virtual keyboard software, Gboard (B. McMahan & D. Ramage, 2017), which uses FL to improve its word prediction and autocorrect capabilities. This avoids sending raw user data to a central server: instead, each user’s device locally trains a model on that user’s typing history and behaviour.

What do Backdoor Attacks have to do with Federated Learning?

While FL addresses some of these privacy concerns, its decentralized design also introduces new threats and challenges. One of these is the threat of backdoor attacks. In a backdoor attack, one or more malicious participants aim to compromise the integrity of the global model by injecting a “backdoor” pattern, or trigger, into their local training data. The attacker then trains its local model to classify these “poisoned” samples as a wrong, attacker-chosen class. After training its poisoned local model, the attacker sends its update to the central server, which aggregates all updates, including the poisoned one. Because the attacker’s model has learned the malicious behaviour, this behaviour can propagate into the global model during aggregation. This creates a serious security problem, as it enables the attacker to alter the model’s outputs for specific inputs.

To understand what backdoor attacks can do, let’s look at a simple image classification example. Imagine a model that is learning to tell apart different kinds of pictures, such as dogs and cats. In a backdoor attack, the attacker tricks the model by tampering with pictures of dogs. They add something small, like a special dot, to all the dog pictures in their own collection. Then they teach their local version of the model that whenever it sees a dog picture with this dot, it should say it’s a cat, not a dog. Next, the attacker’s poisoned local model is combined with the other, benign models when the server averages them. Because the global model learns from all local models, including the poisoned one, the backdoor behaviour propagates into the central model. From then on, whenever the central model sees a dog picture with that special dot, it thinks it’s a cat.
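The attacker’s data-preparation step in this example can be sketched as follows. It is a deliberately tiny illustration under loud assumptions: “pictures” are 4×4 grids of grayscale values between 0 and 1, the “special dot” is a single pixel set to full brightness in the top-left corner, and the function names are hypothetical. The attacker would then train its local model on the returned dataset as usual.

```python
import copy


def add_dot_trigger(image, row=0, col=0, value=1.0):
    """Return a copy of `image` with one bright pixel (the "dot") at (row, col)."""
    triggered = copy.deepcopy(image)  # never modify the attacker's clean sample in place
    triggered[row][col] = value
    return triggered


def poison_local_dataset(dataset, source_label="dog", target_label="cat"):
    """Keep all clean samples and add a dotted copy of every source-class
    picture, relabelled as the target class."""
    poisoned = list(dataset)
    for image, label in dataset:
        if label == source_label:
            poisoned.append((add_dot_trigger(image), target_label))
    return poisoned


# Usage with two tiny, made-up 4x4 grayscale "pictures".
dog_picture = [[0.2] * 4 for _ in range(4)]
cat_picture = [[0.6] * 4 for _ in range(4)]
local_data = [(dog_picture, "dog"), (cat_picture, "cat")]

poisoned_data = poison_local_dataset(local_data)
for image, label in poisoned_data:
    print(label, image[0][0])
```

Keeping the clean dog pictures alongside the dotted copies is a common choice in the backdoor literature: the model still recognizes ordinary dogs correctly, so only inputs carrying the dot are misclassified, which makes the backdoor harder to notice.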

So why does it matter whether FL is resistant against backdoor attacks? Imagine FL being employed to train the AI models that autonomous vehicles use to drive. Attackers could trick the model into misinterpreting a stop sign carrying a hidden trigger, making it think the sign indicates a speed limit of 100 km/h instead of signalling a stop. Backdoors have also been demonstrated in text prediction models, where researchers managed to elicit biased or racially charged outputs in response to specific trigger sentences (Z. Zhang et al., 2022).

A loss of trust in FL would be detrimental, as it would undermine the potential benefits of this privacy-preserving AI technique. FL holds promise for training models on decentralized data without compromising user privacy. Without trust, however, individuals may refuse to participate in FL initiatives, hindering the development of AI models. Moreover, if people do not trust FL, it may not be adopted in important areas such as healthcare and finance, where keeping information private and secure is essential.

How can pixel intensities enhance backdoor attacks?

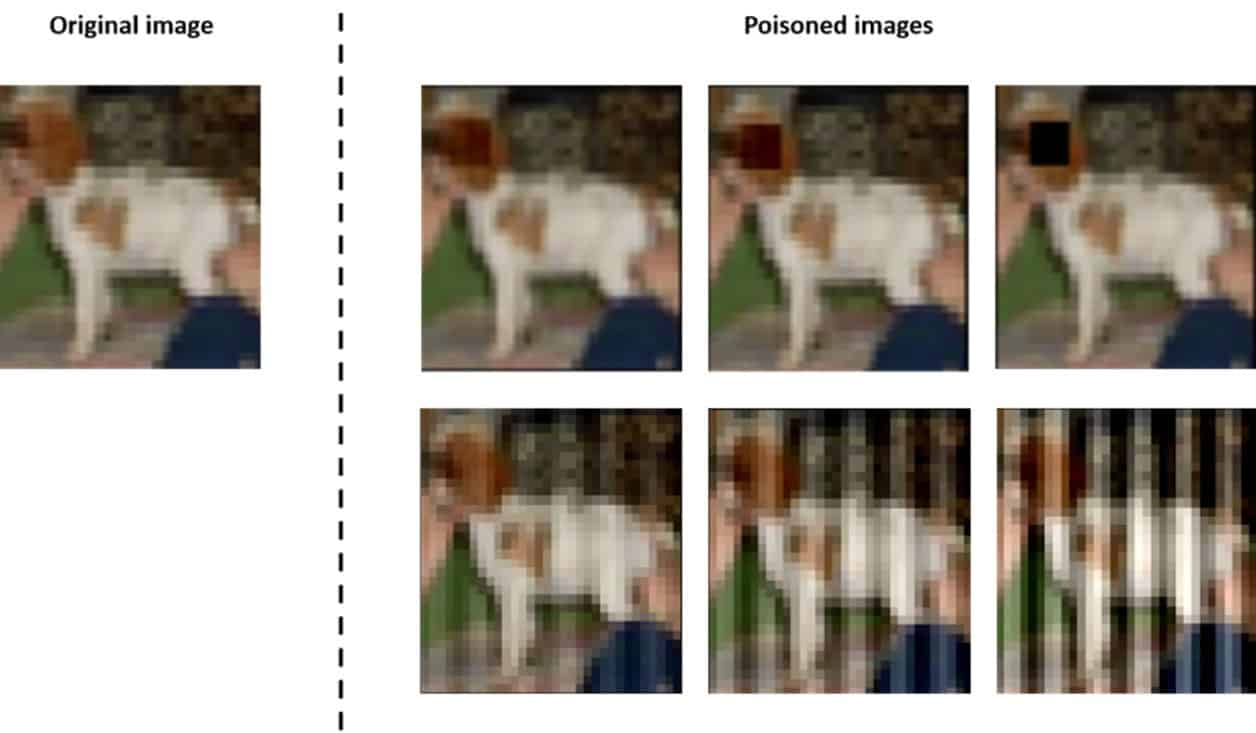

You may now think that triggers placed on stop signs are easy to notice, but recent studies show that attackers can develop sophisticated triggers that are difficult to detect with the human eye. This increases the likelihood of them going unnoticed, thereby raising the risk of harm. The work of A. Shokri Kalisa introduces trigger intensities: a new approach to enhance the effectiveness of a backdoor attack while also adjusting the visibility of its trigger. To explain what a trigger intensity is, think of a dimmable light switch. A higher trigger intensity corresponds to a “brighter” trigger, one that is highly visible within a poisoned image. A low trigger intensity, on the other hand, corresponds to a “dimmer” trigger, making it nearly indistinguishable from the original image. Trigger intensity essentially controls the extent to which the backdoor trigger is perceptible to both the human eye and machine learning (ML) models. Figure 3 gives some examples of two triggers (a square and a striped pattern) at different trigger intensities.
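The article does not give the exact formula behind trigger intensity, so the sketch below assumes one simple model of it: inside the trigger region, each pixel is blended linearly between its original value and the trigger value, with the intensity as the blending weight. This may differ from the formulation used in Shokri Kalisa’s thesis. The square and striped masks mirror the two trigger shapes mentioned above; image size, positions and all names are hypothetical. As a crude stand-in for visibility, it measures the largest per-pixel change the trigger causes.

```python
def square_mask(size, top, left, side):
    """Boolean mask marking a side x side square trigger inside a size x size image."""
    return [
        [top <= r < top + side and left <= c < left + side for c in range(size)]
        for r in range(size)
    ]


def striped_mask(size, period=2):
    """Boolean mask marking vertical stripes (every `period`-th column)."""
    return [[c % period == 0 for c in range(size)] for _ in range(size)]


def apply_trigger(image, mask, intensity, trigger_value=1.0):
    """Blend the trigger into the masked pixels.

    intensity = 1.0 pastes the trigger at full brightness; values close to 0.0
    leave the image almost unchanged (a "dim" trigger).
    """
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must lie in [0, 1]")
    return [
        [
            (1.0 - intensity) * pixel + intensity * trigger_value if in_trigger else pixel
            for pixel, in_trigger in zip(row, mask_row)
        ]
        for row, mask_row in zip(image, mask)
    ]


def max_perturbation(original, poisoned):
    """Largest absolute per-pixel change: a crude proxy for trigger visibility."""
    return max(
        abs(a - b)
        for row_a, row_b in zip(original, poisoned)
        for a, b in zip(row_a, row_b)
    )


# Usage: a uniform grey 6x6 image with a 2x2 square trigger in the corner.
image = [[0.3] * 6 for _ in range(6)]
square = square_mask(6, top=4, left=4, side=2)
for intensity in (1.0, 0.5, 0.05):
    poisoned = apply_trigger(image, square, intensity)
    print(intensity, round(max_perturbation(image, poisoned), 3))
```

Under this linear model the largest pixel change is simply intensity × (trigger value − original pixel value), here 0.7, 0.35 and 0.035, which shows how lowering the intensity pushes a trigger towards being invisible while still altering the input in a consistent, learnable way.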

So why use different trigger intensities in backdoor attacks? First, the idea is to train the local model on a more “dim” trigger. Conditioned on these dimmer triggers, the model becomes accustomed to recognizing even faint versions of the pattern. If a poisoned image with a brighter trigger than the one used in training is then presented, it may provoke a stronger backdoor response: because the model is tuned to detect the dim trigger, encountering a brighter one could produce a more pronounced backdoor effect.

Second, reducing the visibility of a trigger opens up the possibility of invisible triggers: the model recognizes the backdoor, while the trigger itself is barely or not at all visible to the human eye. As a result, it becomes much harder for a defender to tell whether a misclassification is a random error or the result of a poisoned model.

Securing Federated Learning: Essential Best Practices

Whilst the risks mentioned are worrisome, they do not mean that FL is doomed. In fact, ongoing research has proposed many different solutions. In this section, we introduce some tips and tricks for using FL securely:

- Increase User Base: A larger number of users can mitigate the impact of potential attackers. Because the global model is an average over all local models, the influence of any single attacker shrinks as the number of users grows. Most attacks performed in research are tested on smaller user bases, where the chances of success are higher. A larger user base can therefore significantly decrease the likelihood of a successful attack.

- Adopt Robust Aggregation Methods: Several aggregation methods have been proposed to make the resulting model more robust against backdoor attacks. For instance, taking the median (D. Yin et al., 2018) instead of the average reduces the influence of malicious participants who may have manipulated their data or model updates. This approach limits the effect of outliers, so that the final model better reflects the contributions of the benign participants.

- Implement Backup Plans: It is crucial to have a backup plan ready in case the model is compromised by a backdoor attack or another security breach. For example, keeping older versions of the model as backups allows a quick rollback to a previous, secure version if the latest model is poisoned or otherwise compromised. This ensures that your system can continue to function reliably while you investigate and address the security issue. Additionally, regularly testing and validating the backup models helps ensure that they remain intact and can be relied upon when needed.
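The reasoning behind the “Increase User Base” tip is simple arithmetic. With plain (unweighted) averaging, if n − 1 benign users send update u_b and one attacker sends u_a, the global update is ((n − 1)·u_b + u_a)/n, so the attacker moves the result away from the benign value by (u_a − u_b)/n. The sketch below checks this for a few user counts; it assumes scalar updates and a single attacker whose update does not grow with n, and the function name is hypothetical.

```python
def attacker_shift(num_clients, benign_update=0.0, malicious_update=10.0):
    """How far one attacker moves a plain (unweighted) average away from the
    value the benign clients agree on."""
    updates = [benign_update] * (num_clients - 1) + [malicious_update]
    global_update = sum(updates) / num_clients
    return global_update - benign_update


for n in (10, 100, 1000):
    print(n, attacker_shift(n))
```

Going from 10 to 1,000 users shrinks the attacker’s pull on the average by a factor of 100.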
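The “Adopt Robust Aggregation Methods” tip can be illustrated by comparing the ordinary average with the coordinate-wise median studied by Yin et al. (2018), which takes the median of each model parameter separately across users. The toy updates below are made up: four benign users send similar two-parameter updates and one attacker sends an extreme one. This is a sketch of the aggregation rule only, not of a full FL server.

```python
from statistics import median


def coordinate_wise_mean(updates):
    """Plain averaging: one extreme update drags every coordinate with it."""
    return [sum(column) / len(column) for column in zip(*updates)]


def coordinate_wise_median(updates):
    """Median of each parameter across users; robust while attackers are a minority."""
    return [median(column) for column in zip(*updates)]


benign = [[0.9, 1.1], [1.0, 1.0], [1.1, 0.9], [1.0, 1.05]]
malicious = [[50.0, -50.0]]
updates = benign + malicious

print("mean:  ", coordinate_wise_mean(updates))
print("median:", coordinate_wise_median(updates))
```

The mean is pulled far from the benign values (to roughly 10.8 and −9.19), while the median stays at 1.0 for both parameters, because a single outlier cannot move the middle value of five.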
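For the “Implement Backup Plans” tip, the rollback idea can be sketched as a small version history that returns the newest stored model passing a validation check. Everything here is a hypothetical illustration: the `ModelHistory` class, the parameter lists standing in for models, and especially `looks_healthy`, a placeholder for whatever validation a real deployment would run (for example, accuracy on a trusted test set).

```python
from dataclasses import dataclass, field


@dataclass
class ModelHistory:
    """Hypothetical in-memory archive of global model versions (a real
    deployment would persist these, e.g. to disk or object storage)."""

    versions: list = field(default_factory=list)

    def save(self, params):
        """Store a copy of the parameters and return the new version number."""
        self.versions.append(list(params))
        return len(self.versions) - 1

    def rollback(self, passes_validation):
        """Return (version, params) for the newest stored model that passes
        the supplied validation check."""
        for version in range(len(self.versions) - 1, -1, -1):
            if passes_validation(self.versions[version]):
                return version, self.versions[version]
        raise RuntimeError("no stored model version passes validation")


def looks_healthy(params, limit=10.0):
    """Placeholder check; in practice, evaluate the model on a trusted test set."""
    return all(abs(p) < limit for p in params)


history = ModelHistory()
history.save([1.0, 1.0])
history.save([1.1, 0.9])
history.save([50.0, -50.0])  # suppose this round's aggregate was poisoned
version, params = history.rollback(looks_healthy)
print(version, params)
```

Here the poisoned version 2 fails the check, so the system falls back to version 1 while the incident is investigated.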

Conclusion

Federated learning is a promising framework that combines the benefits of training AI models on large quantities of data with the preservation of user data privacy. It does so in a decentralized way, by combining local models that users have trained on their own data. This benefit comes with a drawback: it opens the door to backdoors. Backdoor attacks compromise the correctness of the model and result in adversary-directed behaviour. One such attack alters the pixel intensities of the inserted triggers, allowing attackers to increase both the effectiveness of the backdoor and the stealthiness of the trigger, which makes the attack harder for defenders to detect. Yet this does not mean that FL is doomed. With the right measures and awareness of FL’s weaknesses, the risks of these attacks can be reduced, creating a safe space for AI deployment. This way, you can enjoy the perks of AI in your everyday life while keeping your personal data secure. So, let your phone wish your friend a happy birthday. Just make sure it was not supposed to be a surprise party in the first place.

References

Bagdasaryan, E., et al. (2020). How to backdoor federated learning. In: International Conference on Artificial Intelligence and Statistics. PMLR, pp. 2938–2948.

Chen, B. X. (2024). What the arrival of A.I. phones and computers means for our data. In: The New York Times. Available at: https://www.nytimes.com/2024/06/23/technology/personaltech/ai-phones-computers-privacy.html.

Criado, M. F., et al. (2022). Non-IID data and Continual Learning processes in Federated Learning: A long road ahead. In: Information Fusion, 88, pp. 263–280.

Gupta, A., et al. (2021). Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. In: Array, 10, 100057.

Isaak, J., & Hanna, M. J. (2018). User data privacy: Facebook, Cambridge Analytica, and privacy protection. In: Computer, 51(8), pp. 56–59.

McMahan, B., et al. (2017). Communication-efficient learning of deep networks from decentralized data. In: International Conference on Artificial Intelligence and Statistics. PMLR, pp. 1273–1282.

McMahan, B., & Ramage, D. (2017). Federated learning: Collaborative machine learning without centralized training data. In: Google Research Blog. Retrieved from https://blog.research.google/2017/04/federated-learning-collaborative.html

Ruff, K. M., et al. (2021). AlphaFold and implications for intrinsically disordered proteins. In: Journal of Molecular Biology, 433(20), 167208.

Shokri Kalisa, A., et al. (2023). Unmasking the Power of Trigger Intensity in Federated Learning: Exploring Trigger Intensities in Backdoor Attacks (Master Thesis). TU Delft.

Yin, D., et al. (2018). Byzantine-robust distributed learning: Towards optimal statistical rates. In: International Conference on Machine Learning. PMLR, pp. 5650–5659.

Zhang, Z., et al. (2022). Neurotoxin: Durable backdoors in federated learning. In: International Conference on Machine Learning. PMLR, pp. 26429–26446.

Disclaimer

The authors alone are responsible for the views expressed in this article. The views mentioned do not necessarily represent the views, decisions or policies of the ISACA NL Chapter. The views expressed herein can in no way be taken to reflect the official opinion of the board of ISACA NL Chapter. All reasonable precautions have been taken by the authors to verify the information contained in this publication. However, the published material is being distributed without warranty of any kind, either expressed or implied. The responsibility for the interpretation and use of the material lies with the reader. In no event shall the authors or the board of ISACA NL Chapter be liable for damages arising from its use.